Overview

Note

This project is continually being developed and improved. Expect changes to this manual, the project code, and the project design.

Open Federated Learning (OpenFL) is a Python* 3 project developed by Intel Internet of Things Group (IOTG) and Intel Labs.

Federated Learning

What is Federated Learning?

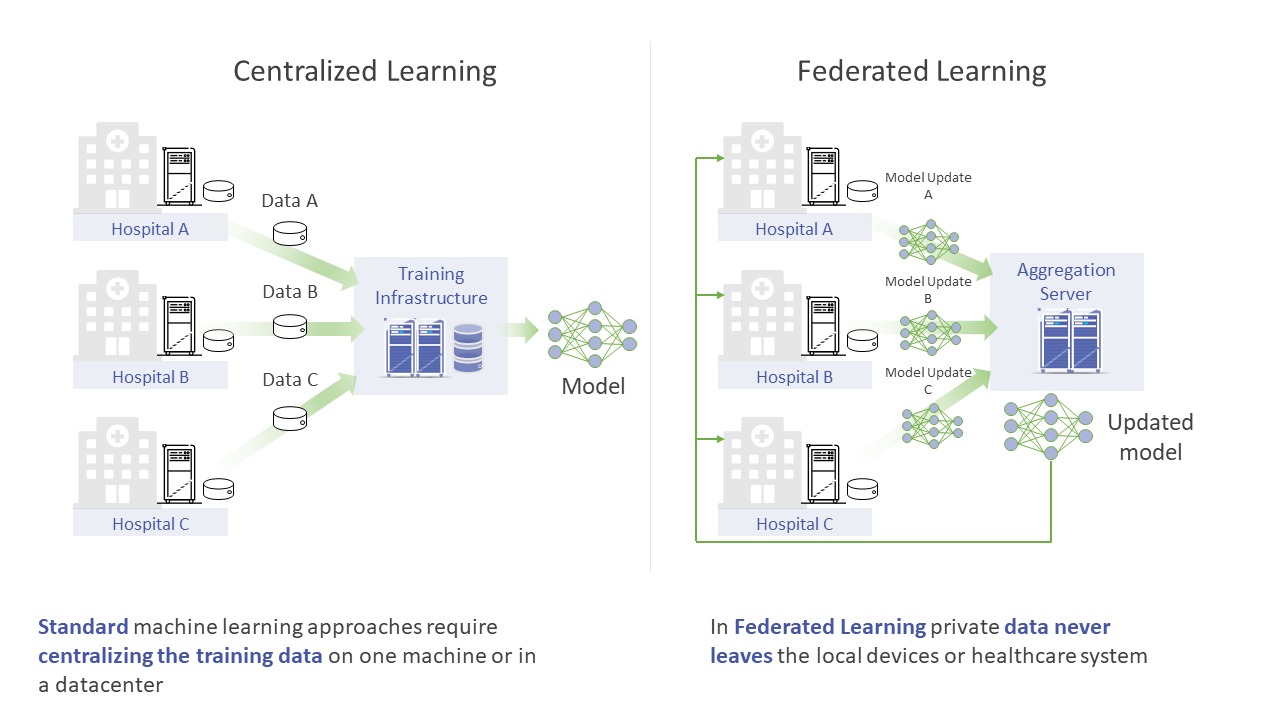

Federated learning is a distributed machine learning approach that enables collaboration on machine learning projects without sharing sensitive data, such as patient records, financial data, or classified secrets (McMahan, 2016; Sheller, Reina, Edwards, Martin, & Bakas, 2019; Yang, Liu, Chen, & Tong, 2019; Sheller et al., 2020). In federated learning, the model moves to meet the data rather than the data moving to meet the model. The only data that moves across the federation is the model parameters and their updates.

Federated Learning

Definitions and Conventions

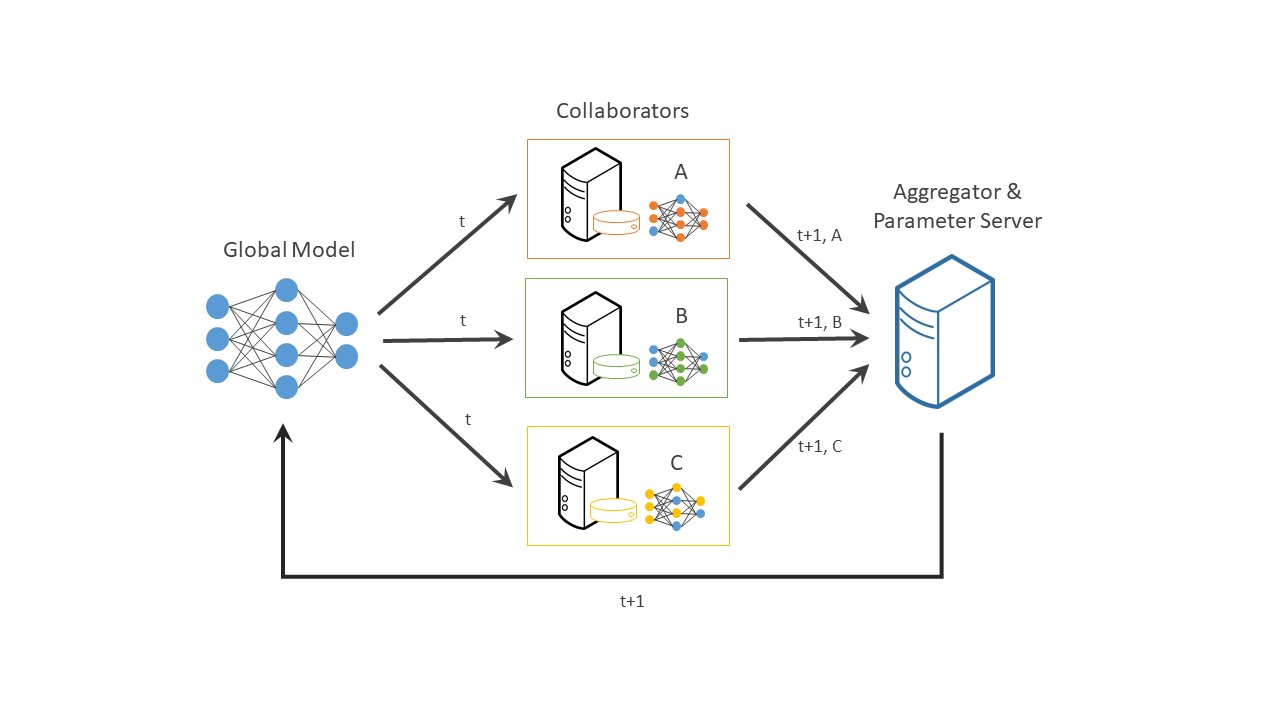

Federated learning adds a few components to the traditional data science training pipeline:

- Collaborator

A collaborator is a client in the federation that has access to the local training, validation, and test datasets. By design, the collaborator is the only component of the federation with access to the local data. The local dataset should never leave the collaborator.

- Aggregator

A parameter server sends a global model to the collaborators. Parameter servers are often combined with aggregators on the same compute node. An aggregator receives locally tuned models from collaborators and combines them into a new global model. Typically, federated averaging (a weighted average) is the algorithm used to combine the locally tuned models.

- Round

A federation round is the interval (typically defined in terms of training steps) in which one aggregation is performed. Collaborators may perform local training on the model for multiple epochs (or even partial epochs) within a single training round.
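The interaction of the three components above can be illustrated with a minimal sketch of a single round. This is not the OpenFL API: the names `federated_average`, `local_training`, and `run_round`, the `Weights` format, and the toy training step are all hypothetical and chosen only for illustration. The sketch assumes that each collaborator's update is weighted by its number of local samples, which is one common way to choose the weights in federated averaging.

```python
from typing import Dict, List, Tuple

# Hypothetical model format: tensor name -> flat list of parameter values.
Weights = Dict[str, List[float]]


def federated_average(updates: List[Tuple[Weights, int]]) -> Weights:
    """Aggregator step: combine locally tuned models into a new global model.

    Each update is (local_weights, num_local_samples). Weighting by sample
    count is an assumption made for this sketch.
    """
    total_samples = sum(n for _, n in updates)
    if total_samples <= 0:
        raise ValueError("at least one collaborator must report samples")
    reference = updates[0][0]
    return {
        name: [
            sum(w[name][i] * n for w, n in updates) / total_samples
            for i in range(len(values))
        ]
        for name, values in reference.items()
    }


def local_training(global_weights: Weights, local_data: List[float]) -> Tuple[Weights, int]:
    """Collaborator step (toy stand-in for real training).

    Moves the single parameter "w" halfway toward the local data mean.
    Only the weights and the sample count are returned; the local data
    never leaves this function.
    """
    mean = sum(local_data) / len(local_data)
    w = global_weights["w"][0]
    return {"w": [w + 0.5 * (mean - w)]}, len(local_data)


def run_round(global_weights: Weights, datasets: List[List[float]]) -> Weights:
    """One federation round: broadcast, train locally, then aggregate once."""
    updates = [local_training(global_weights, data) for data in datasets]
    return federated_average(updates)


if __name__ == "__main__":
    # Two collaborators with 3 and 1 local samples respectively.
    new_global = run_round({"w": [0.0]}, [[1.0, 1.0, 1.0], [5.0]])
    print(new_global)  # {'w': [1.0]}
```

In this example the first collaborator returns `w = 0.5` from 3 samples and the second returns `w = 2.5` from 1 sample, so the weighted average is `(0.5 * 3 + 2.5 * 1) / 4 = 1.0`. Note that only model parameters and sample counts cross the collaborator boundary.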